Upon What Evidence Are 'Evidence-Based' Practices Based?

In the first installment of our Evidence-Based blog series, we asked what the term ‘evidence-based’ really means and gave some general background on the history and use of the term with regard to treatment for substance use disorder and other behavioral conditions. In today’s article, we will delve more deeply into the categories and specific types of evidence upon which these practices are based.

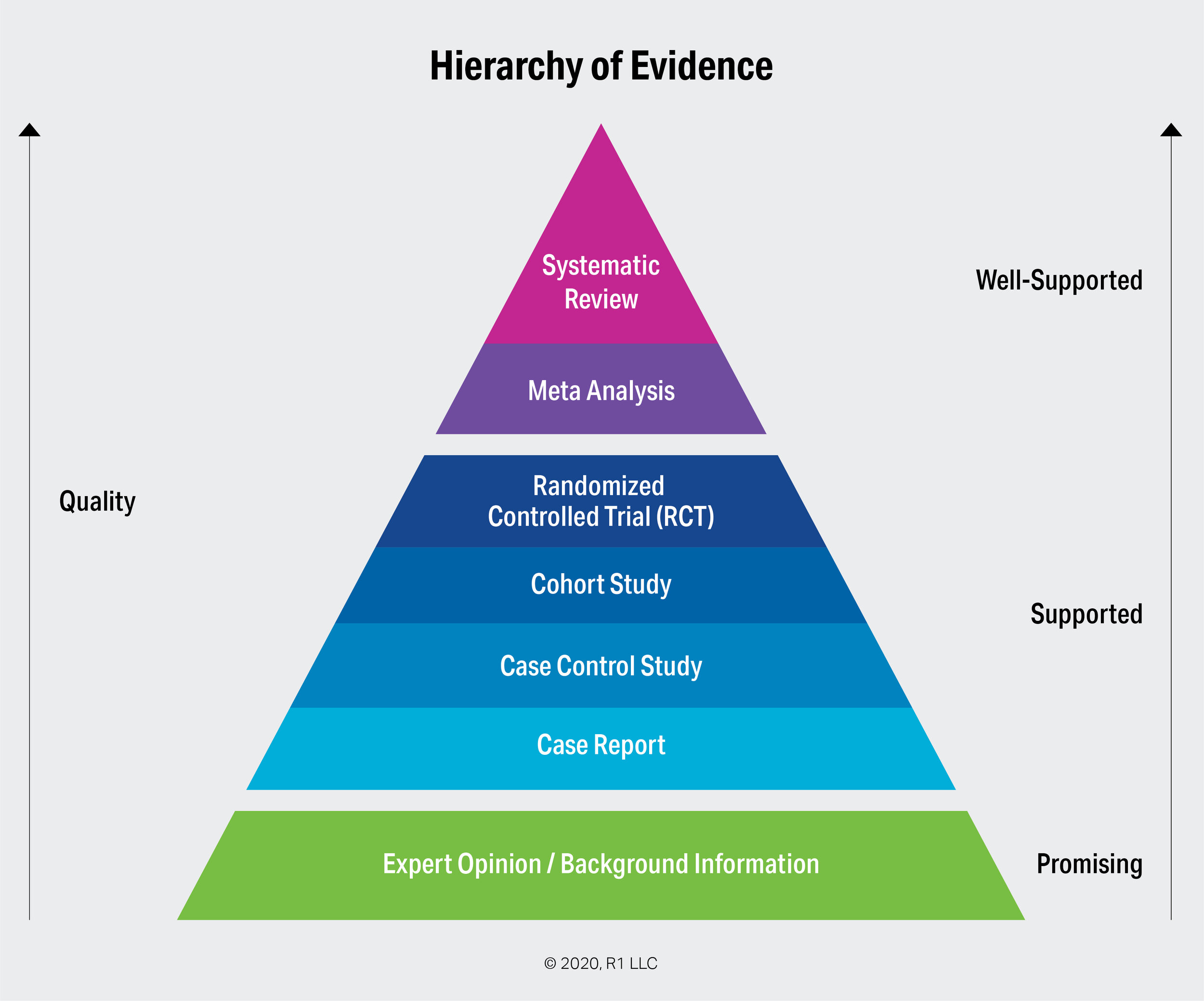

The term ‘evidence-based’ seems quite straightforward. However, the proverbial devil is in the details of qualifying, quantifying, and appraising the evidence. A typical ‘Hierarchy of Evidence’ provides a good overview of the types, strength, and quality of evidence, and it opens the door to tackling some of the considerations involved in assessing evidence-based practices.

The Hierarchy of Evidence - Types and Categories

According to Wikipedia, there are more than 80 common hierarchies for assessing evidence in healthcare practice alone. The image above is our own, but its categories and types line up with the main types of evidence and their placement in the various hierarchies. The primary types of evidence in the hierarchy are:

Systematic Review: A systematic review collects, appraises, and summarizes all the available and pertinent literature on a particular practice, treatment, or intervention. Because a systematic review is comprehensive and its inputs are critically reviewed, it is consistently placed at the top of the hierarchy of evidence.

Meta-Analysis: A meta-analysis collects, analyzes, and aggregates quantitative data from multiple studies, either on its own or as part of a systematic review. Because it pools the findings of many studies, a meta-analysis has greater statistical power and precision than any of the single studies it includes. Moreover, because a meta-analysis is data-driven, it is typically subject to fewer sources of bias than observational studies.
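To make the idea of pooling concrete, here is a minimal sketch of one common method, a fixed-effect inverse-variance meta-analysis, written in Python. The effect sizes and standard errors are invented for illustration, and the function names (`fixed_effect_pool`, `ci95`) are our own, hypothetical helpers. Real meta-analyses often use random-effects models and must also account for study quality and heterogeneity, so treat this as a simplified illustration rather than a recipe.

```python
import math

def fixed_effect_pool(estimates, std_errors):
    """Pool study estimates using inverse-variance (fixed-effect) weights.

    Returns the pooled estimate and its standard error.
    """
    weights = [1.0 / se ** 2 for se in std_errors]  # more precise studies count more
    total_weight = sum(weights)
    pooled = sum(w * est for w, est in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)  # always smaller than any single study's SE
    return pooled, pooled_se

def ci95(estimate, se):
    """Approximate 95% confidence interval using the normal critical value 1.96."""
    return estimate - 1.96 * se, estimate + 1.96 * se

# Hypothetical effect sizes (e.g., standardized mean differences) and standard
# errors from three small studies; these numbers are made up for illustration.
effects = [0.30, 0.45, 0.20]
std_errors = [0.20, 0.25, 0.15]

for est, se in zip(effects, std_errors):
    low, high = ci95(est, se)
    print(f"single study: {est:.2f}, 95% CI ({low:.3f}, {high:.3f})")

pooled, pooled_se = fixed_effect_pool(effects, std_errors)
low, high = ci95(pooled, pooled_se)
print(f"pooled: {pooled:.3f}, SE {pooled_se:.3f}, 95% CI ({low:.3f}, {high:.3f})")
```

With these made-up numbers, each study's 95% confidence interval includes zero on its own, while the pooled interval (roughly 0.06 to 0.49) does not. The pooled result is only as trustworthy as the studies fed into it, however: precise pooling of biased studies still yields a biased answer.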

Randomized Controlled Trial (RCT): RCTs are structured, comparative, controlled experiments in which two or more interventions are studied across groups of individuals who are randomly assigned to receive them. Randomization reduces selection bias and other errors of judgment. Although RCTs sit in the second tier of the pyramid above, it is not unusual to find hierarchies that place RCTs at the top level.

Cohort Study: A cohort study is an observational study, meaning the researchers simply observe and do not intervene. Cohort studies recruit and follow a group (cohort) of participants who share some common characteristic and then compare outcomes with a cohort that does not share that characteristic.

Case Control Study: Like a cohort study, a case control study is observational. It identifies a group of people who have experienced an outcome (the cases) and compares it with a group that did not experience that outcome (the controls). The cases and controls are then compared with respect to their exposure to a factor or group of factors. If the cases have a substantially higher incidence of exposure to a particular factor than the controls, this suggests an association.

Case Report: A case report is a detailed account of the symptoms or signs, diagnosis, treatment, and follow-up of an individual. Because case reports typically describe a single case or a small group of cases, their evidence is considered to be of lower quality than that of larger group studies. However, case reports are usually the first type of study to be performed and often provide the earliest evidence of a practice's effectiveness.

Expert Opinion / Background Information: At the base of the evidence pyramid are expert opinion and background information. Expert opinion does not just mean the views of a single, highly regarded individual. More often, it means a ‘professional consensus’ or the generally accepted guidelines published by an oversight body.

Considerations in Assessing the Best Research Evidence

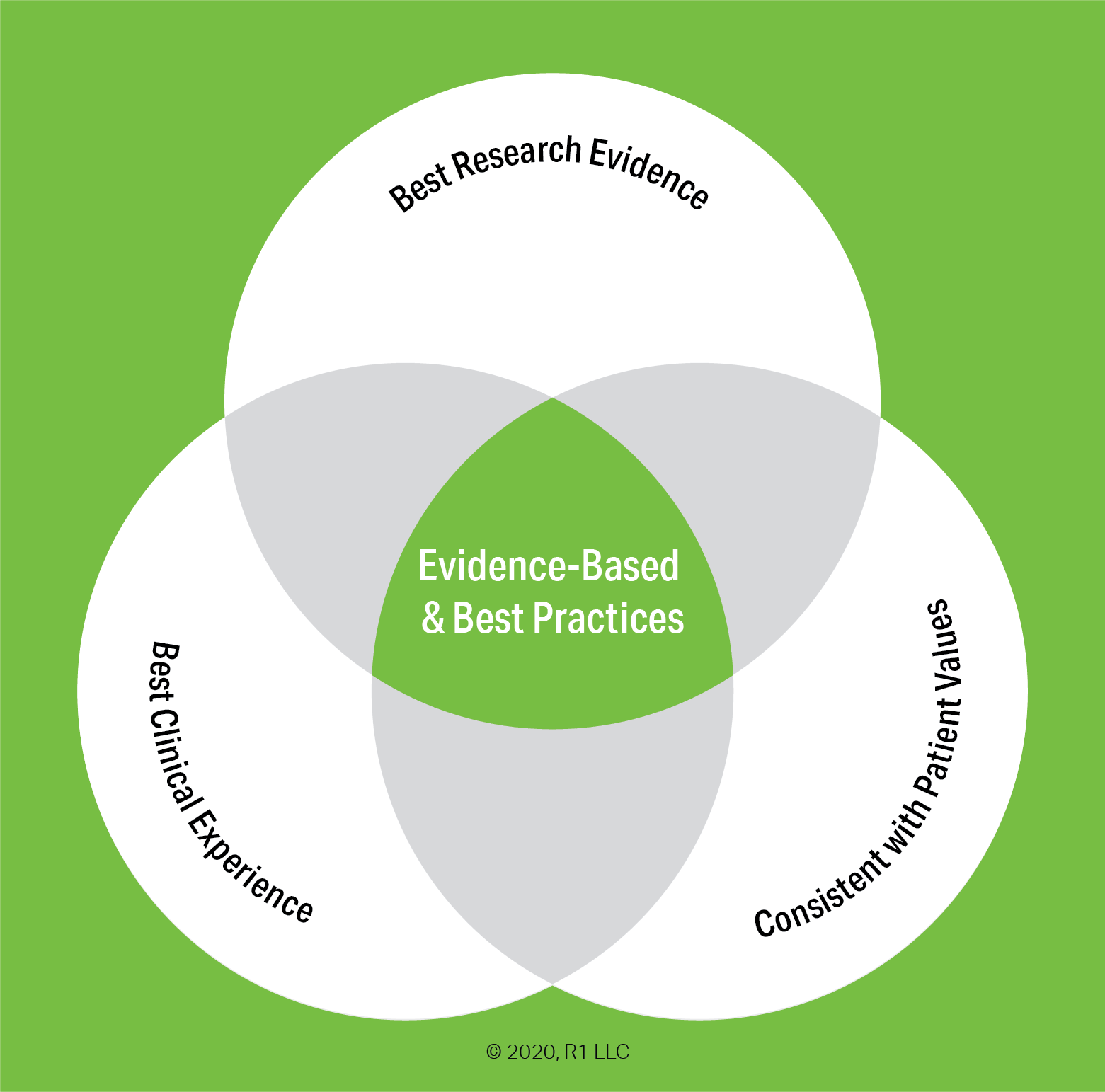

As the discussion above indicates, evidence of widely varying type, quality, and strength can be considered sufficient to earn an “evidence-based” tag for a practice. However, there is more to assessing the evidence than just checking a box along the hierarchy.

For starters, the rank ordering of evidence types in the hierarchy implies a precision that isn’t as absolute as it may appear. The order of the evidence types is best thought of as a set of guidelines. In our last Evidence-Based blog post, we cited the example of a parachute. There have been no randomized controlled trials of the effectiveness of parachutes when jumping from an airborne plane. It would be both unethical and impractical to randomly assign people to a control group that would not have a parachute. Instead, case reports, cohort studies, and expert opinion are accepted as sufficiently conclusive evidence of the benefits of using a parachute.

Systematic reviews and meta-analyses are considered the ‘gold standard’ for evidence-based practices because of their structure, rigor, and comprehensive nature. However, both are subject to errors of judgment in the design, appraisal, and selection of inputs. The conclusions of a meta-analysis will only be as good as the quality of the constituent studies included in it. A single, well-designed, well-run RCT of a diverse population will likely produce more relevant and higher-quality findings than a meta-analysis of small or poorly designed studies.

Demographics and population types are a major consideration in judging the suitability of an evidence-based practice. For instance, the Mesa Grande Project, a well-known meta-analysis of various treatment modalities for alcoholism, surveyed hundreds of clinical studies and included over 70,000 subjects (1). However, only 15% of those subjects were female, and many of the constituent studies included only men. This imbalance doesn’t mean the findings are invalid or the result of overt bias. The proportion of women in clinical trials generally during this period was consistent with that of the Mesa Grande study, and men sought treatment for alcoholism at a far higher rate than women during the years the analysis covered. Otherwise, the study was comprehensive, rigorous, and multi-faceted, and it produced robust conclusions. Nonetheless, its overall recommendations may overlook gender differences in outcomes, and those operating women-only programs might find that a smaller, gender-specific study offers more suitable and effective guidance for their population.

Finally, there is the big issue of time. It’s tempting to jump to the top of the pyramid and say, “I only want well-supported practices with evidence from systematic reviews and meta-analyses!” However, the absence of such evidence does not necessarily mean a practice is less effective. Because they are comprehensive, systematic reviews and meta-analyses often take many years to complete. Similarly, cohort and case control studies often involve following and observing subjects for a number of years before drawing conclusions. Practices supported only by expert opinion, professional consensus, or case studies are not, by definition, less effective strategies. They may simply not yet have been the subject of intensive research.

This is particularly true in the areas of substance use disorder and mental health. These fields developed separately from general medical treatment and have traditionally lacked the economic incentives that have driven research in primary healthcare. Times are changing, but understanding the strength, quality, type, and details of the evidence base will remain a necessity.

Questions to Explore and Next Steps

Think about your own practice, program, or organization as you consider the following questions:

What is the evidence for the evidence-based practices you are currently using?

Who in your organization considers the evidence when assessing treatment strategies?

What barriers or obstacles have you encountered in embracing or implementing evidence-based practices?

The next post in this series will look at the main evidence-based practices for substance use disorder and dive into the specific research evidence behind them.

Until then, please take a look at the content on our new Evidence Base page. We have curated a selection of papers on evidence-based practices for substance use disorder in general, as well as some studies on implementation. We will be adding sections on the evidence for specific treatment modalities.

(1) Miller, W. R., & Wilbourne, P. L. (2002). Mesa Grande: A methodological analysis of clinical trials of treatment for alcohol use disorders. Addiction, 97(3), 265–277.